Aaron Smiles

I am a commercially-minded AI researcher and business developer. I am an EngD candidate in Augmented Telepresence and 3D Computer Vision for Robot Ocean Plastic Telemanipulation at the Centre for Advanced Robotics @ Queen Mary (ARQ), part of the Human Augmentation & Interactive Robotics (HAIR) team at Queen Mary University of London. My EngD supervisors are Ildar Farkhatdinov and Changjae Oh. From 2011 to 2017, I ran a software startup developing VR audio-visual production tools for the Oculus Rift DK1, where I worked on visual spatial sound rendering in virtual environments. I hold an MRes in Digital Media (CS focus) from Newcastle University, where I was part of Culture Lab and a member of the parachuting club, and an Executive MBA from Quantic School of Business and Technology.

Research

My research interests include computer vision and deep learning, mixed reality, robot teleoperation, and ocean engineering.

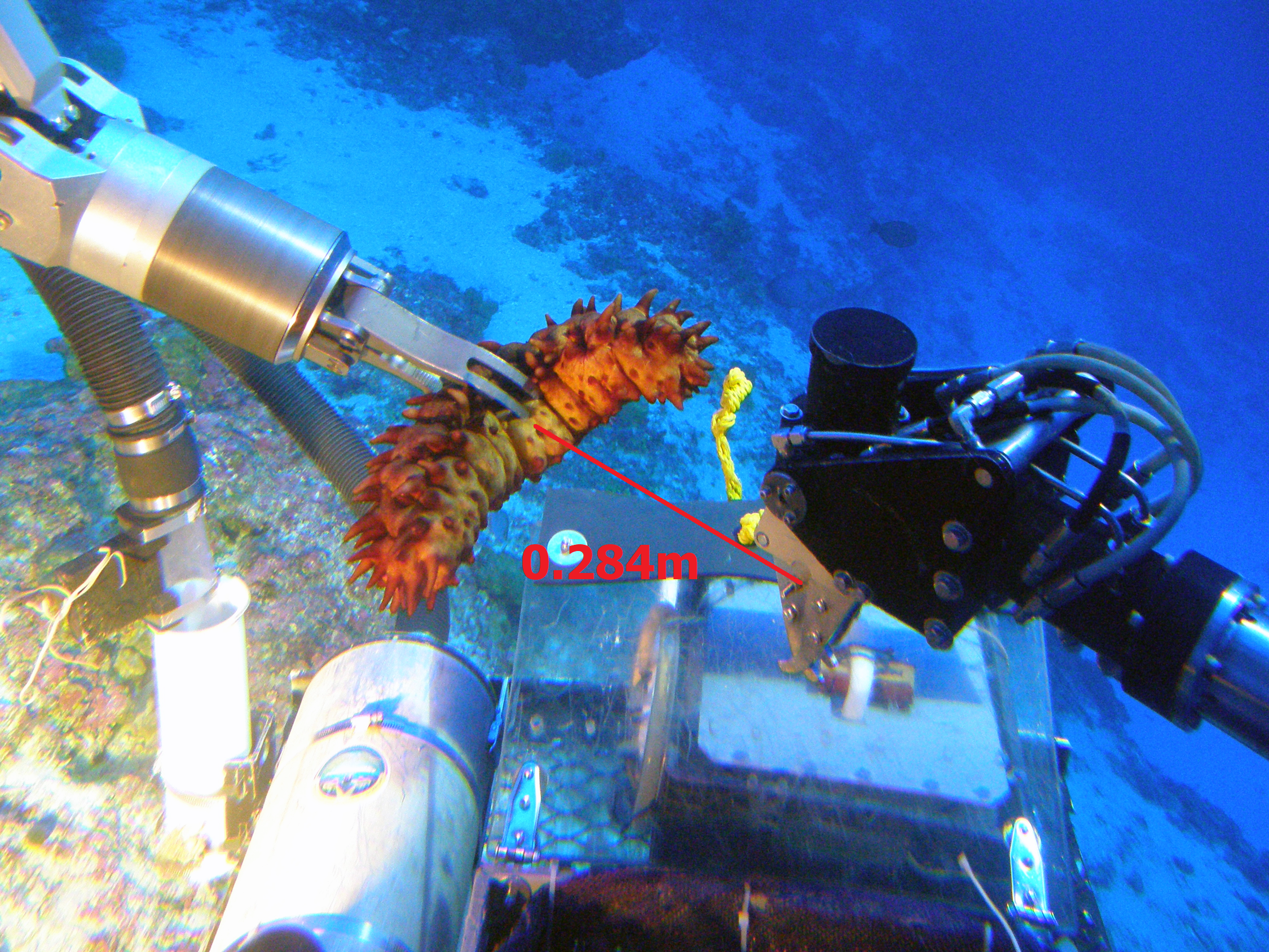

ATSEA: Augmented Telepresence in robot SEAfloor plastic cleanup (WIP)

Aaron Smiles, 2023-10

video / image / abstract / demo video 2

Not enough robots are used in the fight against ocean plastics. ROVs controlled by human operators can collect ocean waste, but human depth perception underwater is impaired, especially when the scene is viewed on 2D screens. This is made worse when objects are transparent and backgrounds are nearly blank, both common in underwater scenes. We present work on an underwater augmented (or mixed) reality telepresence system that utilises stereo vision for 3D plastic bottle detection in varying lighting and turbidity conditions, together with audiovisual aids, to assist with ROV telemanipulation during plastic waste collection. Initial tests with an unsynchronised pair of stereo cameras showed that block matching algorithms had difficulty finding matches on transparent materials. Using a synchronised stereo camera and a YOLO-based model, qualitative results are promising. We hypothesise that our mixed reality telepresence system can increase seafloor plastic bottle collection compared with traditional ROV end-effector operation, whilst reducing cognitive load. Supplementary material, including demo videos, can be found here: https://github.com/aaronlsmiles/ATSEA.
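The block-matching difficulty mentioned above can be illustrated with a minimal sketch of sum-of-absolute-differences (SAD) matching along one row of a rectified stereo pair. This is not the ATSEA pipeline: the function names, window size, disparity range, and 15% uniqueness threshold are illustrative assumptions. It shows why nearly blank or transparent regions fail: when many candidate windows look alike, the best and second-best costs are nearly equal, so the match has to be rejected. OpenCV's `StereoBM` exposes comparable safeguards through its texture-threshold and uniqueness-ratio settings.

```python
"""Minimal SAD block matching along one rectified scanline (illustrative sketch)."""

from typing import List, Optional


def sad(a: List[int], b: List[int]) -> int:
    """Sum of absolute differences between two equal-length windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def match_scanline(
    left: List[int],
    right: List[int],
    half_window: int = 2,
    max_disparity: int = 8,
    uniqueness: float = 0.15,
) -> List[Optional[int]]:
    """Return a disparity per left-row pixel, or None where the match is ambiguous.

    For each pixel x in the left row, the window around x is compared with
    windows around x - d in the right row (d = 0..max_disparity) and the
    lowest-cost d is kept. A match is rejected unless the second-best cost is
    more than `uniqueness` (as a fraction) worse than the best, which fails on
    blank or transparent regions where many candidate windows look alike.
    """
    n = len(left)
    disparities: List[Optional[int]] = [None] * n
    for x in range(half_window, n - half_window):
        window_l = left[x - half_window : x + half_window + 1]
        costs = []
        for d in range(max_disparity + 1):
            xr = x - d
            if xr - half_window < 0:
                break  # candidate window would fall off the left edge
            window_r = right[xr - half_window : xr + half_window + 1]
            costs.append((sad(window_l, window_r), d))
        if len(costs) < 2:
            continue  # not enough candidates to judge uniqueness
        costs.sort()
        (best_cost, best_d), (second_cost, _) = costs[0], costs[1]
        # Uniqueness test: reject when the runner-up is (nearly) as good as the winner.
        if second_cost <= best_cost * (1 + uniqueness):
            continue
        disparities[x] = best_d
    return disparities
```

On a textured toy row shifted by 3 pixels, the recovered disparity is 3 wherever the full search range fits inside the image; on a constant row every pixel is rejected as ambiguous, mirroring the failure on featureless water and clear plastic.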

Implementation of a Stereo Vision System for a Mixed Reality Robot Teleoperation Simulator (TAROS 2023, Cambridge, UK)

Aaron Smiles, 2023-07

code / poster / image / image 2 / poster / paper

This paper presents preliminary work on a stereo vision system designed for a mixed reality-based simulator dedicated to robotic telemanipulation. The simulator comprises a 3D visual display, stereo cameras, a desktop haptic interface, and a virtual model of a remote robotic manipulator. Integrating the stereo vision system enables accurate distance measurement in the remote environment and precise visual alignment between the scene captured by the cameras and the graphical representation of the virtual robot model. This paper describes the technical aspects of the developed stereo system and reports the outcomes of its preliminary evaluation.
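The distance measurement and visual alignment described above both rest on the standard pinhole stereo model. The sketch below assumes a rectified rig with the left camera as the reference frame: depth follows from disparity as Z = f_x * B / d, and points of a virtual model expressed in the same camera frame can be projected back onto the image with the same intrinsics so that the overlay lines up with the video. The `StereoRig` class, the `distance` helper, and the parameter values in the usage example are hypothetical, not the calibration used in the paper.

```python
"""Pinhole stereo geometry sketch: disparity -> 3D point, and 3D point -> pixel."""

from dataclasses import dataclass
from typing import Tuple

Point3 = Tuple[float, float, float]


@dataclass(frozen=True)
class StereoRig:
    """Hypothetical rectified stereo rig parameters (left camera is the reference)."""

    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)
    baseline: float  # distance between the two camera centres (metres)

    def triangulate(self, u: float, v: float, disparity: float) -> Point3:
        """Back-project left-image pixel (u, v) with disparity d (pixels) to metres."""
        if disparity <= 0:
            raise ValueError("disparity must be positive for a point in front of the rig")
        z = self.fx * self.baseline / disparity  # Z = f * B / d
        x = (u - self.cx) * z / self.fx
        y = (v - self.cy) * z / self.fy
        return x, y, z

    def project(self, point: Point3) -> Tuple[float, float]:
        """Project a 3D point in the left-camera frame onto the left image plane."""
        x, y, z = point
        if z <= 0:
            raise ValueError("point must lie in front of the camera")
        return self.fx * x / z + self.cx, self.fy * y / z + self.cy


def distance(p: Point3, q: Point3) -> float:
    """Euclidean distance between two 3D points, e.g. a gripper tip and a target."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5
```

For example, with `StereoRig(fx=800.0, fy=800.0, cx=320.0, cy=240.0, baseline=0.125)`, a pixel at (400, 200) with a disparity of 50 px triangulates to roughly (0.2, -0.1, 2.0) m, and projecting that point returns approximately (400, 200). A virtual robot model rendered with the same intrinsics and camera pose stays aligned with the live image for the same reason.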

Projects

I am a creative technology entrepreneur and product manager with a passion for applied and commercialised research. Besides the research interests above, here is a sampling of my publicly disclosed work (more current work remains in stealth!):

Suite of Music AI Tools

SoundVerse, 2023-01

image

Managed the deployment of a suite of generative AI audio tools built on music LLMs, transformers, and diffusion models. I used Gradio to create a GUI wrapper and serve the models to the web with a user-friendly front end. The models were containerised using Docker and stored in GCP Artifact Registry before being deployed to a GPU VM instance in Compute Engine.
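A Gradio wrapper of the kind described above can be sketched as follows. The `generate_audio` function is a hypothetical stand-in for a model call (it synthesises a sine tone and writes a WAV file with the standard library), and the sample rate, port, and UI labels are assumptions; the Docker, Artifact Registry, and Compute Engine steps are not shown. Gradio is imported inside `build_demo` so the model function can be exercised without it installed.

```python
"""Minimal Gradio wrapper sketch around a placeholder audio "model"."""

import math
import struct
import tempfile
import wave

SAMPLE_RATE = 16_000  # Hz; hypothetical, real music models vary


def generate_audio(prompt: str, duration_s: float) -> str:
    """Placeholder for a generative model call: writes a WAV file and returns its path.

    A real deployment would run model inference here. This stand-in
    synthesises a sine tone whose pitch depends on the prompt length so the
    wrapper can be tested end to end without a GPU.
    """
    freq = 220.0 + 20.0 * (len(prompt) % 20)
    n_samples = int(SAMPLE_RATE * duration_s)
    frames = b"".join(
        struct.pack("<h", int(0.3 * 32767 * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)))
        for i in range(n_samples)
    )
    with tempfile.NamedTemporaryFile(suffix=".wav", delete=False) as tmp:
        path = tmp.name
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 16-bit PCM
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)
    return path


def build_demo():
    """Wrap generate_audio in a simple web UI (requires `pip install gradio`)."""
    import gradio as gr  # imported lazily so generate_audio works without Gradio

    return gr.Interface(
        fn=generate_audio,
        inputs=[
            gr.Textbox(label="Prompt"),
            gr.Slider(1, 10, value=3, step=1, label="Duration (s)"),
        ],
        outputs=gr.Audio(type="filepath", label="Generated audio"),
        title="Music AI demo (sketch)",
    )


if __name__ == "__main__":
    # Bind to all interfaces so the app is reachable from outside a Docker container.
    build_demo().launch(server_name="0.0.0.0", server_port=7860)
```

Returning a file path with `gr.Audio(type="filepath")` keeps the wrapper independent of how each model represents audio internally, which is convenient when several different models sit behind one front end.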

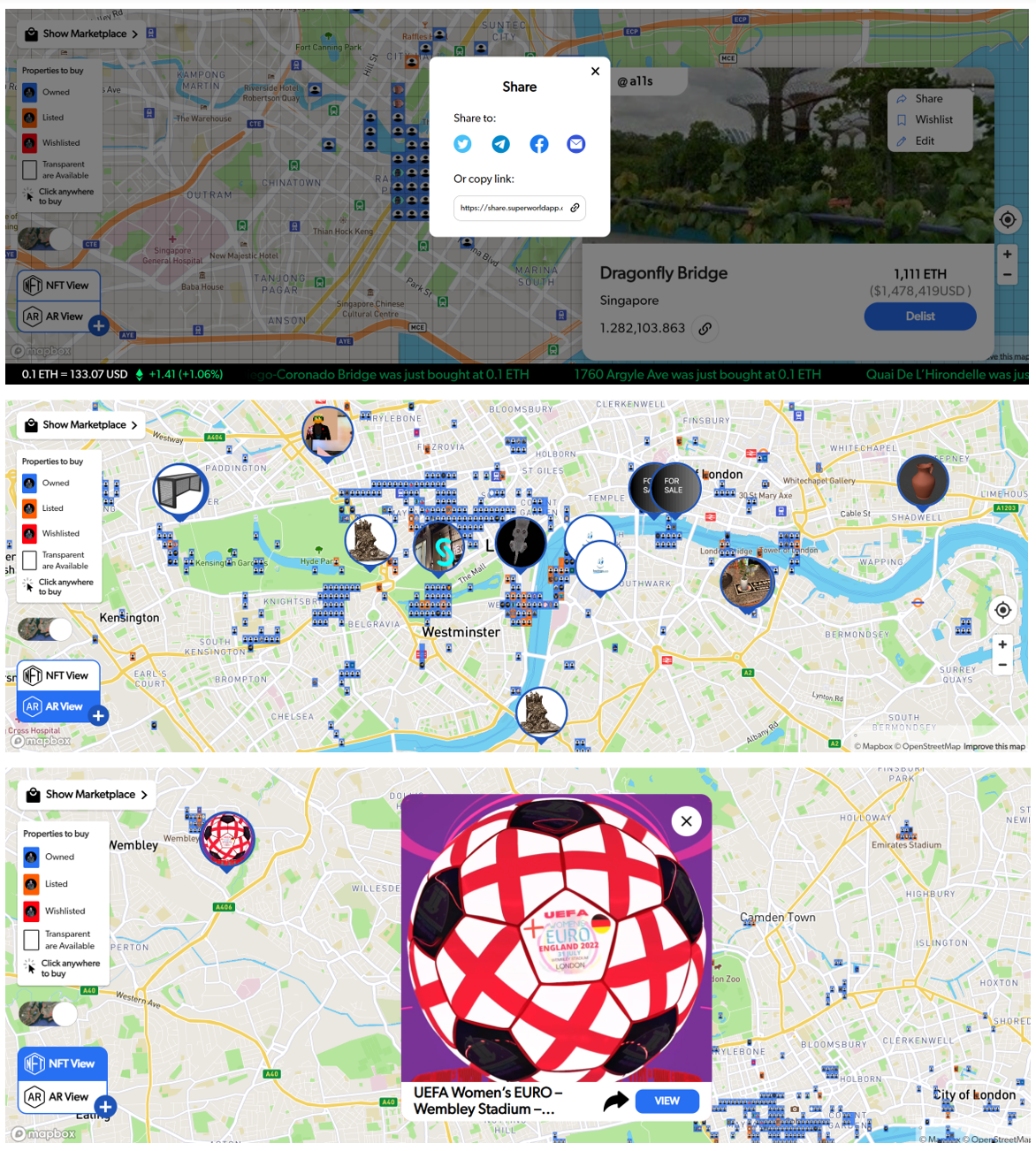

AR Real Estate Map

SuperWorld, 2020-02

image / image 2 / image 3 / image 4 / image 5 / image 6 / image 7 / image 8 / document

Whilst interning at SuperWorld as a Product Manager, I worked on the AR and NFT Real Estate Map. I strategised with the C-Suite on growth and coordinated the Dev, Blockchain, and UX teams to complete weekly sprints, contributing new initiatives and user stories for the web app. These included social sharing of plot features, a referral process and rewards programme, geolocation highlights of NFTs on the world map with gamification elements (e.g., easter egg hunts), SuperWorld-related memes and custom emojis on the Discord server, and a general shift towards NFT creators (e.g., an NFT Marketplace and artist feature pieces). Together, these initiatives increased community engagement by over 40%.

VReeMind - VR Phobia App (MBA Capstone)

Quantic, 2019-11

image / image 2 / image 3 / document / slides / sheets / sheets2 / sheets3 / sheets4

Worked in a team of four on the MBA Capstone project to write a business plan, financial model, and product/service design for a new startup venture. We decided as a group to build a business that served the common good. Being a passionate technologist, I pitched that whatever idea we chose should leverage current state-of-the-art (SOTA) technology to create our niche. From there, VReeMind was born: a mental health app utilising virtual reality (VR) technology. I led the team by producing the product concept design, the market placement using Blue Ocean Strategy and the strategy canvas (image 2), the branding (image 3), the initial financial model (sheets2), and the Cap Table/ROI analysis (sheets3). Our team's accounting specialist and I worked together to complete the final financial model (sheets) and the user model/pricing strategy (sheets4).

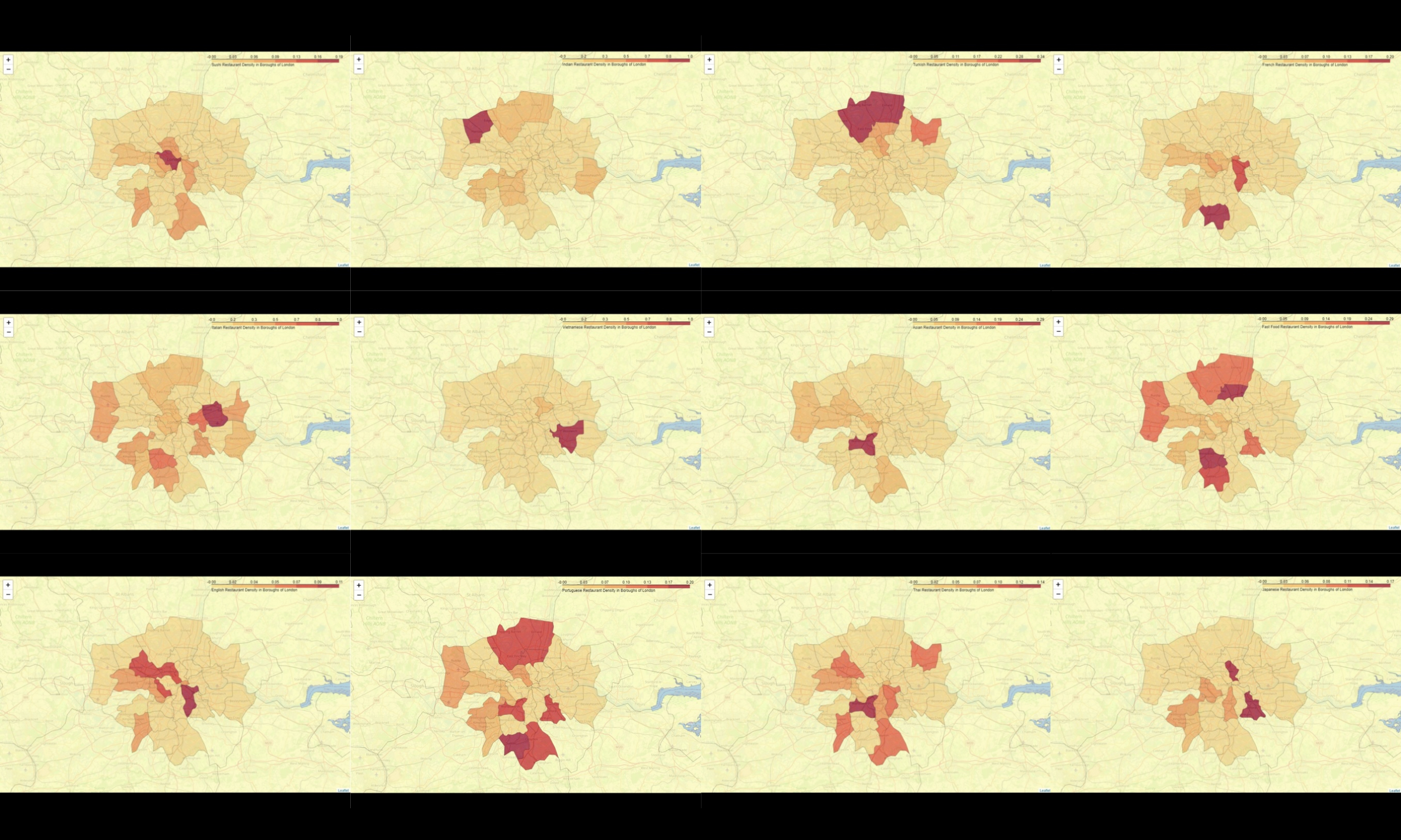

Battle of the Neighbourfoods 🌭

IBM Professional Certificate in Data Science, 2019-01

image / image 2 / code / document

Capstone assignment of the IBM Professional Certificate in Data Science on Coursera, using the Foursquare API to perform geolocation data analysis and visualisation. My approach was to investigate restaurants in London, cluster them by cuisine, and then heatmap the boroughs by the density of each cuisine per area. Following that, I aimed to use the API to check which restaurants were still operating during COVID and to visualise that data. However, there were not enough free API calls to do this properly, so I did my best with the data at hand; the model is set up so that this data would be easy to obtain with enough Foursquare API credits.
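The clustering step described above can be sketched without external dependencies: count venues per borough and cuisine, normalise each borough into cuisine shares (the per-area densities a heatmap would show), then group boroughs with Lloyd's k-means. This is an assumed reconstruction, not the project's notebook, which would more typically use pandas and scikit-learn; the function names and the toy venue records in the usage example are hypothetical, not Foursquare data.

```python
"""Sketch: per-borough cuisine profiles clustered with k-means (pure Python)."""

from collections import Counter
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def cuisine_profiles(
    venues: Sequence[Tuple[str, str]], cuisines: Sequence[str]
) -> Dict[str, Vector]:
    """Turn (borough, cuisine) venue records into per-borough cuisine shares."""
    counts: Dict[str, Counter] = {}
    for borough, cuisine in venues:
        counts.setdefault(borough, Counter())[cuisine] += 1
    profiles: Dict[str, Vector] = {}
    for borough, c in counts.items():
        total = sum(c.values())
        profiles[borough] = [c[k] / total for k in cuisines]  # shares sum to <= 1
    return profiles


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two profiles."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iterations: int = 20) -> List[int]:
    """Plain Lloyd's k-means, initialised with the first k points for determinism."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels
```

With four toy boroughs, two dominated by Indian and two by Italian restaurants, `kmeans(..., k=2)` places each pair in the same cluster. Initialising from the first k points keeps the sketch deterministic; a real analysis would use k-means++ or several random restarts.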

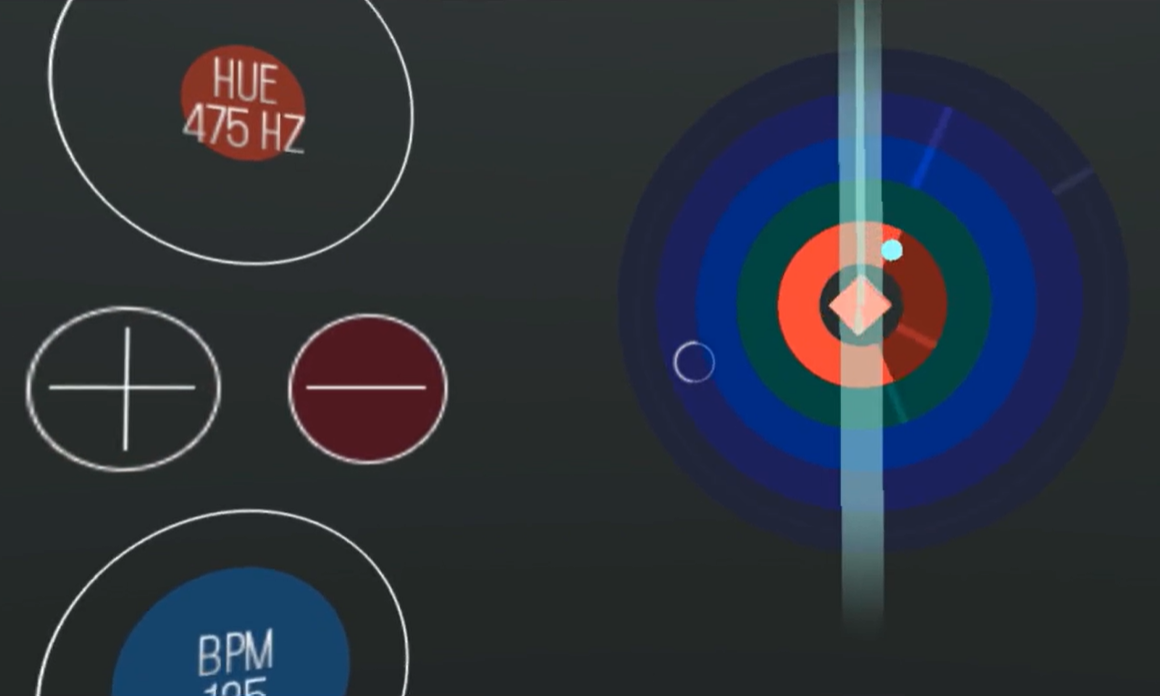

Sinetic AV - VR Visual-Audio Production Game Interface

Sinetic AV LTD, 2015-11

patent / image / image 2 / document / video

Sinetic AV was VR, gesture-controlled spatial visual-audio production software for making spatial music in an immersive visual environment using visual objects moulded by hand. Visual properties, such as colour and shape, corresponded to audio properties, such as frequency and waveform shape, respectively. The software was developed in Unity. The entire environment was the soundstage, and objects occupied the soundstage as they appeared visually (e.g., larger objects took up more of the soundstage); the creator could walk around the soundstage, hearing their creation from different listening perspectives. Creators could also join multiple environments (soundstages) together to create an extended track or album, walking through them to progress the musical piece. The environment and objects featured real-world physics (e.g., mass, gravity, magnetism) and could be interacted with or animated according to those properties. The IP at the time was independently valued by Coller IP at over £69,000 (see document). This figure was heavily reduced, by 25-45%, due to early-stage risk factors and the fact that the software was a very basic prototype. Not a bad valuation for the £8,000 spent creating the IP. Full promo video: https://vimeo.com/155418055
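The colour-to-pitch, shape-to-waveform, and walk-around listening behaviour described above can be illustrated with a small sketch. The original Sinetic AV mappings are not documented here, so everything below is hypothetical: hue sweeps five octaves upward from 55 Hz on a logarithmic scale, the shape-to-waveform table is invented, and spatialisation uses simple inverse-distance gain plus equal-power panning on a 2D floor plane rather than whatever Unity audio setup the prototype used.

```python
"""Hypothetical visual-to-audio mapping and listener-relative spatialisation sketch."""

import math
from typing import Tuple

# Hypothetical shape -> waveform table (not the original Sinetic AV mapping).
SHAPE_TO_WAVEFORM = {"sphere": "sine", "cube": "square", "pyramid": "triangle"}

LOW_HZ, OCTAVES = 55.0, 5  # hue sweeps 55 Hz up towards 1760 Hz


def hue_to_frequency(hue_deg: float) -> float:
    """Map hue (degrees, wrapped to 0-360) to pitch on a log scale: equal hue steps, equal intervals."""
    return LOW_HZ * 2 ** (OCTAVES * (hue_deg % 360) / 360)


def waveform_sample(shape: str, phase: float) -> float:
    """One sample in [-1, 1] of the waveform assigned to a shape; phase is in cycles."""
    kind = SHAPE_TO_WAVEFORM[shape]
    p = phase % 1.0
    if kind == "sine":
        return math.sin(2 * math.pi * p)
    if kind == "square":
        return 1.0 if p < 0.5 else -1.0
    # Triangle: rises from -1 to 1 over the first half cycle, falls back over the second.
    return 4 * p - 1 if p < 0.5 else 3 - 4 * p


def spatialise(
    listener: Tuple[float, float],
    facing_rad: float,
    source: Tuple[float, float],
    ref_distance: float = 1.0,
) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a source heard from the listener's position.

    Floor plane: x to the right, y forward when facing_rad == 0; facing_rad
    turns the listener from +y towards +x. Gain falls off as 1/distance
    (clamped inside ref_distance) and equal-power panning follows azimuth.
    """
    dx, dy = source[0] - listener[0], source[1] - listener[1]
    gain = ref_distance / max(math.hypot(dx, dy), ref_distance)
    # Azimuth relative to where the listener faces: 0 ahead, positive to the right.
    azimuth = math.atan2(dx, dy) - facing_rad
    pan = max(-1.0, min(1.0, math.sin(azimuth)))  # -1 hard left .. +1 hard right
    theta = (pan + 1) * math.pi / 4  # 0 .. pi/2
    return gain * math.cos(theta), gain * math.sin(theta)
```

A source 3 m to the listener's right is heard at one third gain, almost entirely in the right channel; after the listener turns to face the opposite direction (`facing_rad=math.pi`), the same source moves to the left channel, which is the "different listening perspectives" effect in its simplest form.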

Misc Design Work

I apply a modular, Lego-like approach to my design thinking process during ideation and prototyping, whether for product design or software design. Here is some of my publicly disclosed work, including smaller side projects, idea dumps, and unpublished mockups for design, marketing, and advertising briefs.

GPT's The Final Fantasy Game Design (TFF 2.0)

personal, 2022-11

image / document / document2 / document3

An ongoing project using GPT to expand my game design from high school and, hopefully, eventually bring it to life once GPT-4 is released. Images and concept art were created using Stable Diffusion.

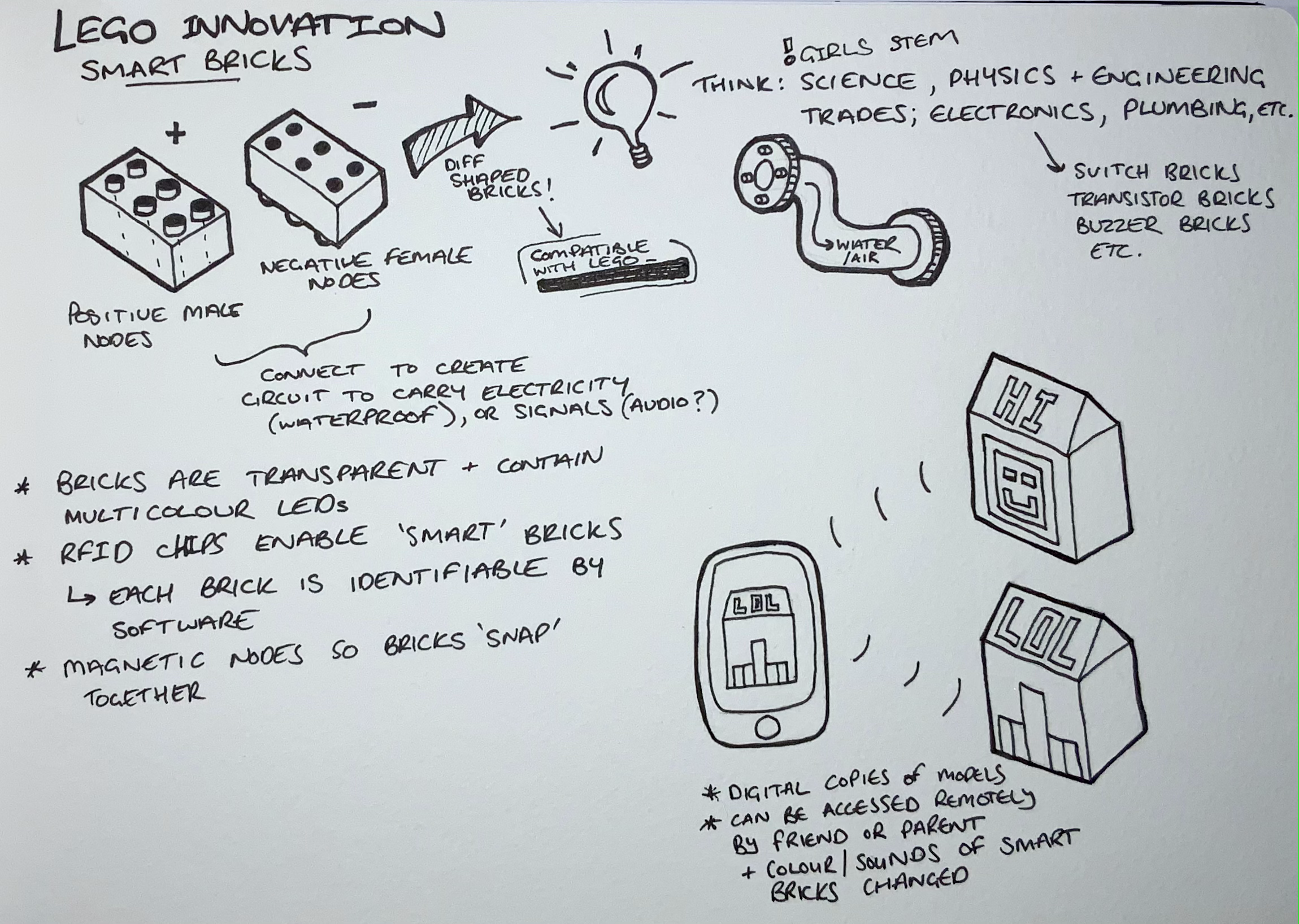

Lego Smart Bricks & Other Play Technology Designs

D&AD, 2014-03

image / image 2 / image 3

A sample of play technology concept designs (including Lego smart bricks) that I created for fun and for D&AD briefs for Lego and Hasbro.

Logo Designs

misc., 2014-03

image / image 2 / image 3 / image 4 / image 5

A sample of logo designs I've created and/or creatively directed.

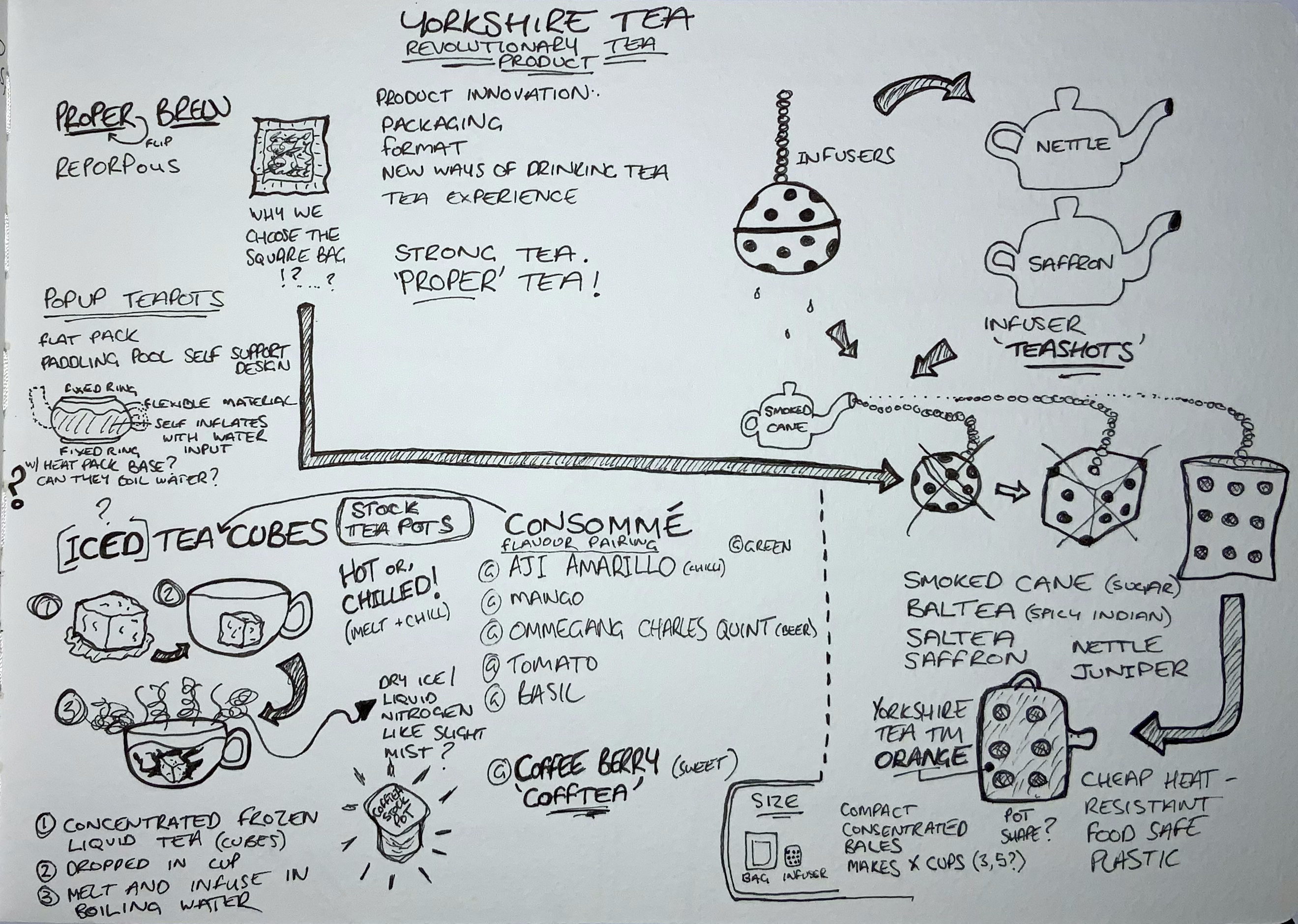

Food Technology Designs

D&AD/Intellectual Ventures, 2014-03

image / image 2 / image 3 / image 4 / image 5 / image 6

A sample of food technology concept designs I created for fun and as part of briefs from D&AD and Intellectual Ventures, including briefs for brands such as Yorkshire Tea and Taylors Coffee.

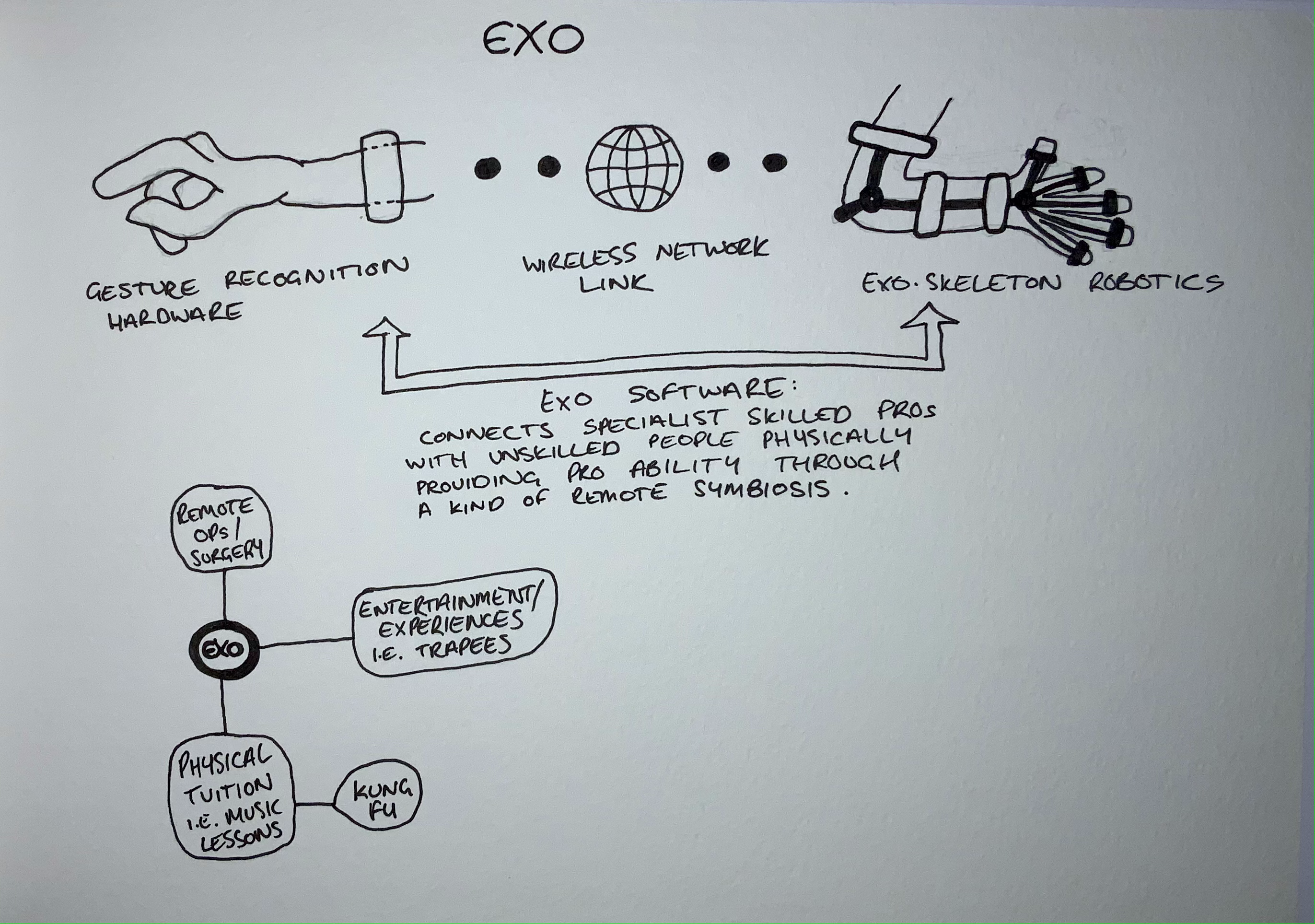

Haptic and Telerobotic Technology Designs

misc., 2013-11

image / image 2 / image 3

A sample of haptic and telerobotic technology concept designs I created years before I got into robotics.

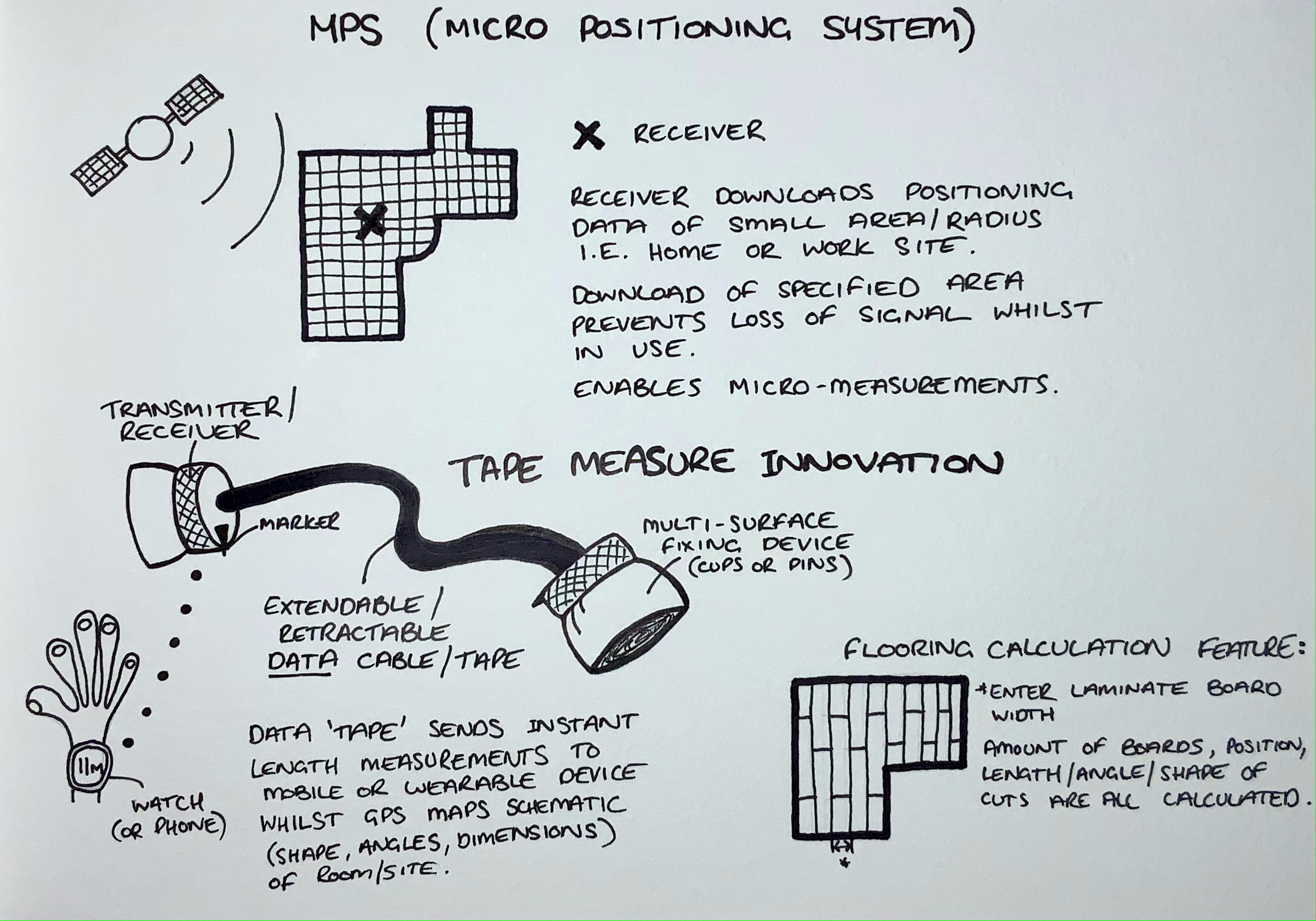

Tape Measure Innovation Design

D&AD, 2013-09

image

A tape measure innovation concept, created for fun and based on a D&AD brief for a home hardware brand.

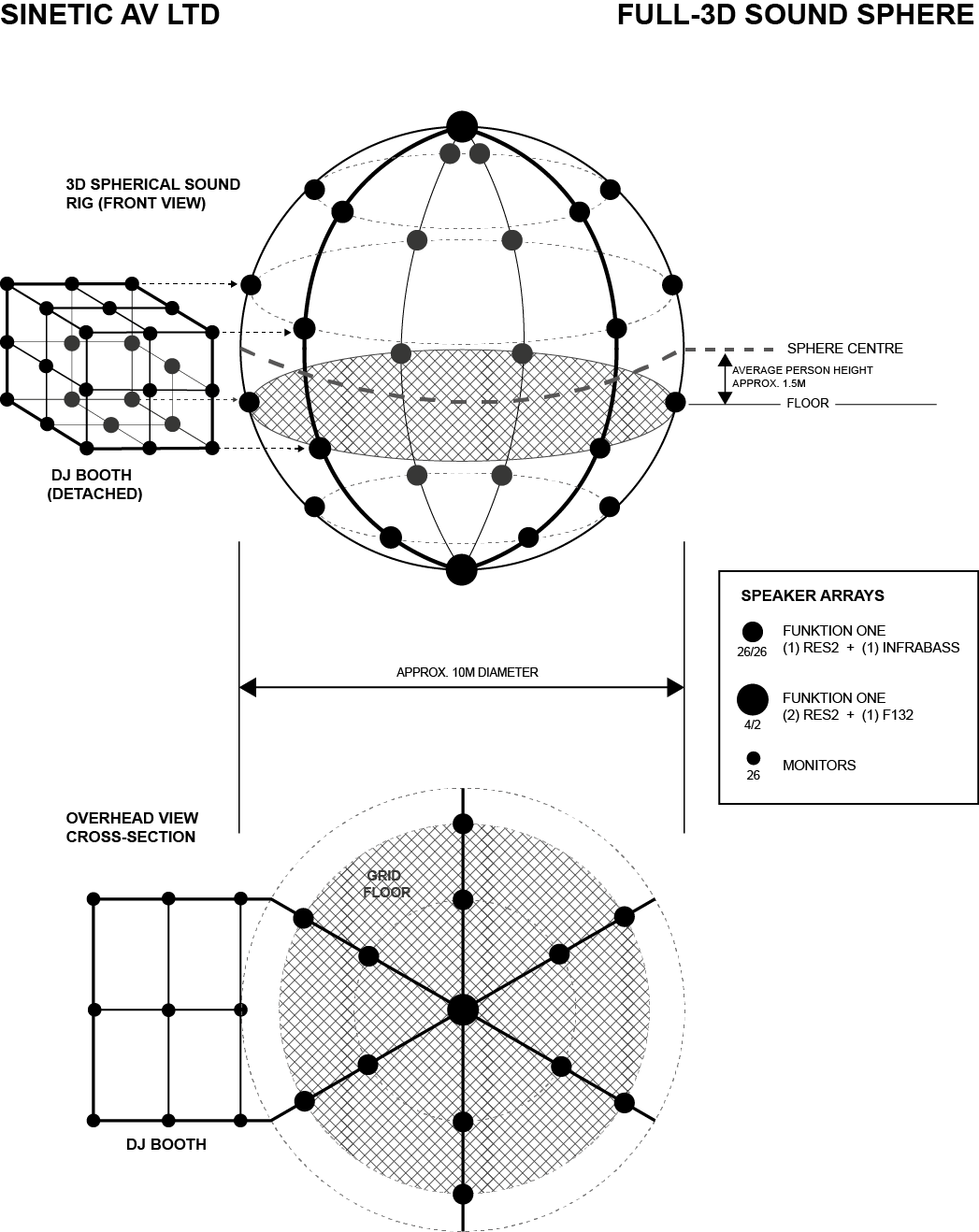

The Atom

Sinetic AV LTD, 2013-06

image / image 2 / image 3

The Atom was a spherical sound system concept, born whilst developing Sinetic AV and imagining the best arena in which to perform music produced in the immersive Sinetic environment.

The Final Fantasy Game Design (TFF 1.0)

personal, 2008-11

image / document

A collection of concept drawings, character designs, and storylines for a Final Fantasy-inspired game that I lost myself in during the final couple of years of secondary school (1997-2001, digitised in 2008), whilst absorbed in FF7.

Design and source code from Jon Barron's website, adapted using Leonid Keselman's Jekyll fork.